Turkey may have been behind the battlefield deployment of a military-grade autonomous drone, a deployment that would mark an historic and chilling first if the drone's artificial intelligence-based weapons system, essentially operating with a mind of its own, was used to kill.

That’s the disturbing conclusion of Zachary Kallenborn, writing for the Bulletin of the Atomic Scientists, following the publication of a United Nations report about a March 2020 skirmish in the military conflict in Libya in which a ‘killer robot’ drone, known as a lethal autonomous weapons system—or LAWS—made its wartime debut. The report, however, does not explicitly determine if the LAWS, a Kargu-2 attack drone made by Turkish company STM, killed anyone or establish if it was operating in autonomous or manual mode.

"If anyone was killed in an autonomous attack, it would likely represent an historic first known case of artificial intelligence-based autonomous weapons being used to kill," wrote Kallenborn.

Military-grade autonomous drones can pick their own targets and kill without the assistance or direction of a remote human operator. Such weapons are known to be in development, but there have been no confirmed cases of autonomous drones killing fighters on the battlefield.

The Kargu-2 was reportedly used during fighting between the UN-recognised Government of National Accord (GNA), backed by Turkey, and forces aligned with General Khalifa Haftar, backed by Russia. A report that examined the incident was prepared by the UN Panel of Experts on Libya.

"Logistics convoys and retreating [Haftar-affiliated forces] were subsequently hunted down and remotely engaged by the unmanned combat aerial vehicles or the lethal autonomous weapons systems such as the STM Kargu-2 ... and other loitering munitions," the panel behind the analysis wrote.

“Machine-learning”

The Kargu-2 can be operated both autonomously and manually. It is said to use "machine learning" and "real-time image processing" against its targets.

The UN report added: "The lethal autonomous weapons systems were programmed to attack targets without requiring data connectivity between the operator and the munition: in effect, a true 'fire, forget and find' capability."

"Fire, forget and find" refers to a weapon that, once fired, can guide itself to its target.

The Harop "kamikaze drone" (Image: Julian Herzog, CC-BY-SA 4.0).

Azerbaijan’s use of Turkish and Israeli armed drones in last autumn’s Second Nagorno-Karabakh War with Armenia is widely viewed by defence analysts as having given it a decisive advantage that led to a comprehensive victory. In September last year, The Times of Israel reported Hikmet Hajiyev, an adviser to Azerbaijan’s President Ilham Aliyev, as praising Israel Aerospace Industries’ Harop (or Harpy 2), a so-called kamikaze drone, also known as a loitering munition. Hajiyev described the Harop as “very effective,” said it was used in a “kamikaze” capacity on the battlefield and offered “a big ‘chapeau’ to the engineers who designed it”.

Hangs in the sky

The Harop is an anti-radiation drone that can hang in the sky before autonomously homing in on radio emissions, then diving into the target and self-destructing. During the Nagorno-Karabakh conflict, there were reports of the drone causing demoralisation among Armenian troops. The Harop can operate fully autonomously or with a human-in-the-loop.

Not everyone in the world of defence is particularly concerned by the UN’s report on the use of the Kargu-2. "I must admit, I am still unclear on why this is the news that has gotten so much traction," Ulrike Franke, a senior policy fellow at the European Council on Foreign Relations, wrote on Twitter, as cited by NPR.

Franke noted that loitering munitions have been used in combat for "a while" and questioned whether the autonomous weapon used in Libya actually caused any casualties.

A global survey commissioned by the Campaign to Stop Killer Robots last year found that a majority of respondents, 62%, were opposed to the use of lethal autonomous weapons systems.

News

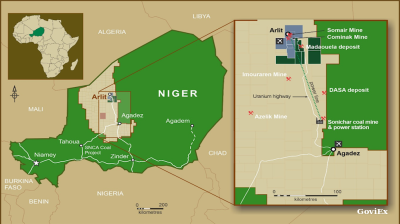

GoviEx, Niger extend arbitration pause on Madaouela uranium project valued at $376mn

Madaouela is among the world’s largest uranium resources, with measured and indicated resources of 100mn pounds of U₃O₈ and a post-tax net present value of $376mn at a uranium price of $80 per pound.

Brazil’s Supreme Court jails Bolsonaro for 27 years over coup plot

Brazil’s Supreme Court has sentenced former president Jair Bolsonaro to 27 years and three months in prison after convicting him of attempting to overturn the result of the country’s 2022 election.

Iran cleric says disputed islands belong to Tehran, not UAE

Iran's Friday prayer leader reaffirms claim to disputed UAE islands whilst warning against Hezbollah disarmament as threat to Islamic world security.

Kremlin puts Russia-Ukraine ceasefire talks on hold

\Negotiation channels between Russia and Ukraine remain formally open but the Kremlin has put talks on hold, as prospects for renewed diplomatic engagement appear remote. Presidential spokesman Dmitry Peskov said on September 12, Vedomosti reports.